Chess Strategy Principles CEOs Can Apply to Business

2022 Tech Trends to Increase Business Growth & Resilience

The How & Why of Future-Proofing Your Company

Scaling Up: 13 Roadblocks to Success

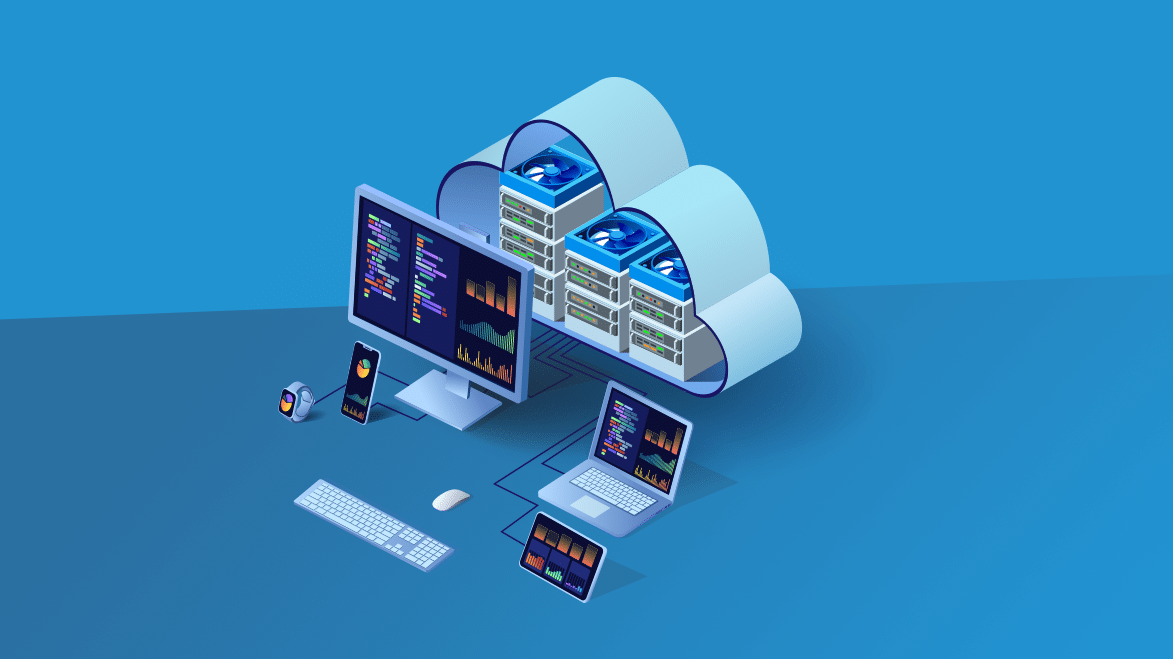

The Six Key Benefits of Using Desktop-as-a-Service (DaaS)

Now, IT engineers can design, develop, and implement a company's entire IT infrastructure within a cloud environment, often far faster than they could with on-premises hardware.

Post-Pandemic Business Success is About Renewal

As businesses regain their balance, leaders must focus on renewal, not recovery, if they want to stay competitive in their markets.

Why is Digital Transformation So Important to Sustained Success? (Part 2)

Nearly 60% of North American enterprises now rely on public cloud platforms, five times the share that did just five years ago.

Why is Digital Transformation So Important to Sustained Success? (Part 1)

Virtual Cybersecurity Professionals Needed More Than Ever

Virtual Cybersecurity Professionals (VCSPs) can help your business get ahead of the security challenges it faces today.

What’s Going to Save Higher Education?

Harnessing the Power of the Cloud for Business Optimization

Business optimization is the process of making your operations more efficient and cost-effective.

What is 5G and Why Should You Care?

5G is a new digital system for transmitting bytes (data units) over the air. Its new air interface can use millimeter-wave spectrum in addition to lower frequency bands.